3D Human Pose Estimation via Intuitive Physics

Shashank Tripathi, Lea Müller, Chun-Hao Paul Huang, Omid Taheri, Michael J. Black, Dimitrios Tzionas

CVPR 2023

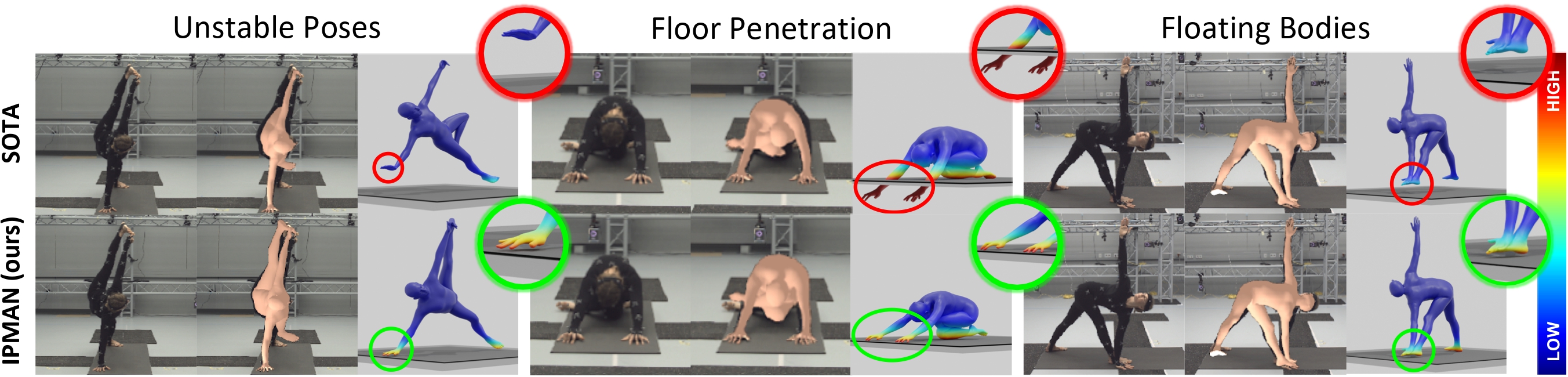

Estimating a 3D body from an image is ill-posed. A recent, representative optimization method produces bodies that are in unstable poses, penetrate the floor, or hover above it. In contrast, IPMAN estimates a 3D body that is physically plausible. To achieve this, IPMAN uses novel intuitive-physics (IP) terms that exploit inferred pressure heatmaps on the body, the Center of Pressure (CoP), and the body’s Center of Mass (CoM). Body heatmap colors encode per-vertex pressure.

Estimating 3D humans from images often produces implausible bodies that lean, float, or penetrate the floor. Such methods ignore the fact that bodies are typically supported by the scene. Physics engines can be used to enforce physical plausibility, but they are not differentiable, rely on unrealistic proxy bodies, and are difficult to integrate into existing optimization and learning frameworks. In contrast, we exploit novel intuitive-physics (IP) terms that can be inferred from a 3D SMPL body interacting with the scene. Inspired by biomechanics, we infer the pressure heatmap on the body, the Center of Pressure (CoP) from the heatmap, and the SMPL body’s Center of Mass (CoM). With these, we develop IPMAN to estimate a 3D body from a color image in a “stable” configuration by encouraging plausible floor contact and overlapping CoP and CoM. Our IP terms are intuitive, easy to implement, fast to compute, differentiable, and can be integrated into existing optimization and regression methods. We evaluate IPMAN on standard datasets and on MoYo, a new dataset with synchronized multi-view images, ground-truth 3D bodies with complex poses, body-floor contact, CoM, and pressure. IPMAN produces more plausible results than the state of the art, improving accuracy for static poses while not hurting dynamic ones.
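To make the CoP/CoM idea concrete, here is a minimal sketch in plain Python. It is not the released IPMAN code, and every function name and parameter in it is hypothetical. It assumes a y-up coordinate frame with a flat floor, a simple exponential pressure falloff with height above the floor, and a uniform-mass-per-vertex approximation of the CoM; the actual IP terms may be defined differently.

```python
import math

def pressure_from_height(height, falloff=100.0):
    """Assumed heuristic: pressure is 1 at (or below) the floor and decays with height."""
    return math.exp(-falloff * max(height, 0.0))

def center_of_mass(vertices):
    """Crude CoM proxy: unweighted vertex mean (assumes equal mass per vertex)."""
    n = len(vertices)
    return tuple(sum(v[k] for v in vertices) / n for k in range(3))

def center_of_pressure(vertices, floor_y=0.0, falloff=100.0):
    """Pressure-weighted mean of the vertex positions."""
    weights = [pressure_from_height(v[1] - floor_y, falloff) for v in vertices]
    total = sum(weights)
    return tuple(
        sum(w * v[k] for w, v in zip(weights, vertices)) / total for k in range(3)
    )

def stability_loss(vertices, floor_y=0.0, falloff=100.0):
    """Horizontal (x, z) distance between CoM and CoP; small means 'stable'."""
    com = center_of_mass(vertices)
    cop = center_of_pressure(vertices, floor_y, falloff)
    return math.hypot(com[0] - cop[0], com[2] - cop[2])

# Toy "bodies": two foot vertices on the floor and one head vertex.
upright = [(-0.1, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 1.7, 0.0)]
leaning = [(-0.1, 0.0, 0.0), (0.1, 0.0, 0.0), (0.6, 1.7, 0.0)]

print("upright loss:", stability_loss(upright))
print("leaning loss:", stability_loss(leaning))
```

In this toy setup the leaning body has its CoM shifted horizontally away from the feet, so its loss is larger than that of the upright body. Every operation here is smooth almost everywhere in the vertex positions, so the same computation could be expressed in an autodiff framework and used as a differentiable penalty.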

Data and Code

Please register and accept the License Agreement on this website to access the IPMAN downloads.

When creating an account, please opt in to email communication so that we can notify you of the release and of any significant updates.

Code: The training and evaluation code for IPMAN-R is available. Please click on the "code" button at the top of this page.

MoYo dataset: The dataset is already available. Please click on the "data" button at the top of this page.

Referencing IPMAN

@inproceedings{tripathi2023ipman,
  title = {{3D} Human Pose Estimation via Intuitive Physics},
  author = {Tripathi, Shashank and M{\"u}ller, Lea and Huang, Chun-Hao P. and Taheri, Omid and Black, Michael J. and Tzionas, Dimitrios},
  booktitle = {Conference on Computer Vision and Pattern Recognition ({CVPR})},
  pages = {4713--4725},
  year = {2023},
  url = {https://ipman.is.tue.mpg.de}
}

Acknowledgments

We thank Tsvetelina Alexiadis, Taylor McConnell, Claudia Gallatz, Markus Höschle, Senya Polikovsky, Camilo Mendoza, Yasemin Fincan, Leyre Sanchez and Matvey Safroshkin for data collection, Giorgio Becherini for MoSh++, Joachim Tesch and Nikos Athanasiou for visualizations, and Zincong Fang, Vassilis Choutas and all of Perceiving Systems for fruitful discussions. This work was funded by the International Max Planck Research School for Intelligent Systems (IMPRS-IS) and in part by the German Federal Ministry of Education and Research (BMBF), Tübingen AI Center, FKZ: 01IS18039B.

Disclosure

MJB has received research gift funds from Adobe, Intel, Nvidia, Meta/Facebook, and Amazon. MJB has financial interests in Amazon, Datagen Technologies, and Meshcapade GmbH. While MJB is a consultant for Meshcapade, his research in this project was performed solely at, and funded solely by, the Max Planck Society.

Contact

For questions, please contact ipman@tue.mpg.de.

For commercial licensing, please contact ps-licensing@tue.mpg.de.